Robotics

Pose Estimation

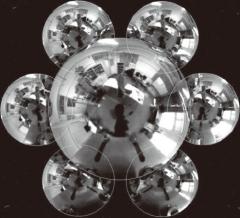

Images obtained from a moving camera contain information about the motion of the camera itself. For example, when the camera translates or rotates, the captured images exhibit motion flow (optical flow) determined by that motion. By analyzing this flow, we can estimate the motion of the camera, or of a robot equipped with it. Conventional cameras, however, have a narrow field of view, within which the flows caused by translation and rotation are very similar, so it is difficult to distinguish the two motions.
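To illustrate the ambiguity, the following Python sketch compares the flow produced by a sideways translation with the flow produced by a yaw rotation chosen to match it on the optical axis. It is a minimal illustration under stated assumptions (NumPy, an ideal spherical camera, a static scene at uniform depth) using the standard spherical motion-field equation; the helpers `spherical_flow`, `sample_directions` and `flow_discrepancy` are hypothetical names, not code from this research.

```python
import numpy as np


def spherical_flow(p, t, omega, depth):
    """Motion field on the unit viewing sphere for a static scene.

    p: (N, 3) unit viewing directions; t: (3,) camera translational velocity;
    omega: (3,) camera angular velocity; depth: (N,) distance to each point.
    Returns (N, 3) tangent flow: -omega x p - (t - (t . p) p) / depth.
    """
    rotational = -np.cross(omega, p)
    t_perp = t - (p @ t)[:, None] * p  # component of t orthogonal to each ray
    translational = -t_perp / depth[:, None]
    return rotational + translational


def sample_directions(half_fov_deg, n=2000, seed=0):
    """Random unit directions within half_fov_deg of the optical axis (+z)."""
    rng = np.random.default_rng(seed)
    v = rng.normal(size=(100_000, 3))
    v /= np.linalg.norm(v, axis=1, keepdims=True)
    keep = v[:, 2] >= np.cos(np.radians(half_fov_deg))
    return v[keep][:n]


def flow_discrepancy(half_fov_deg, depth=10.0):
    """Relative difference between a sideways translation and a matching yaw.

    Assumes every scene point lies at the same depth (illustrative only).
    """
    p = sample_directions(half_fov_deg)
    d = np.full(len(p), depth)
    t = np.array([1.0, 0.0, 0.0])              # translate along x
    omega = np.array([0.0, 1.0 / depth, 0.0])  # rotate about y; equal flow on the axis
    f_t = spherical_flow(p, t, np.zeros(3), d)
    f_r = spherical_flow(p, np.zeros(3), omega, d)
    return np.linalg.norm(f_t - f_r) / np.linalg.norm(f_t)


print(f"narrow (10 deg half-FOV): {flow_discrepancy(10):.3f}")
print(f"full sphere:              {flow_discrepancy(180):.3f}")
```

Under these assumptions, the relative discrepancy comes out much smaller for a 10° half field of view than for the full sphere, which is the intuition behind using a wide field of view to separate translation from rotation.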

We resolve this ambiguity between translational and rotational flow by using an omnidirectional camera with a wide field of view. We further extend this idea to a stereo omnidirectional camera. In addition to the wide field of view, stereo observation helps in estimating the camera motion because it provides the depth of objects in the scene.

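As a minimal sketch of why depth helps, assuming an ideal spherical camera, noise-free flow, and a depth value already supplied for each ray (for example by stereo), the spherical motion field is linear in the angular velocity and the translational velocity, so both can be recovered by ordinary least squares. This is a generic textbook formulation with hypothetical function names, not the feature-classification method of the publications listed here.

```python
import numpy as np


def skew(v):
    """Matrix [v]_x such that skew(v) @ w == np.cross(v, w)."""
    x, y, z = v
    return np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])


def spherical_flow(p, t, omega, depth):
    """Flow on the unit sphere: -omega x p - (t - (t . p) p) / depth."""
    t_perp = t - (p @ t)[:, None] * p
    return -np.cross(omega, p) - t_perp / depth[:, None]


def estimate_egomotion(p, flow, depth):
    """Linear least-squares estimate of (omega, t) from flow and known depth.

    Each ray gives flow_i = skew(p_i) @ omega - (I - p_i p_i^T) @ t / d_i,
    which is linear in the six unknowns, so all rays are stacked together.
    """
    blocks = []
    for p_i, d_i in zip(p, depth):
        rot = skew(p_i)                                  # p x omega == -omega x p
        trans = -(np.eye(3) - np.outer(p_i, p_i)) / d_i  # depth-scaled translation term
        blocks.append(np.hstack([rot, trans]))
    A = np.vstack(blocks)          # (3N, 6) design matrix
    b = flow.reshape(-1)           # (3N,) stacked flow vectors, same row order
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x[:3], x[3:]


# Synthetic check: rays over the whole sphere, with depths standing in for
# values a stereo sensor might supply.
rng = np.random.default_rng(1)
p = rng.normal(size=(300, 3))
p /= np.linalg.norm(p, axis=1, keepdims=True)
depth = rng.uniform(2.0, 20.0, size=300)
omega_true = np.array([0.02, -0.05, 0.01])
t_true = np.array([0.3, 0.0, -0.1])
flow = spherical_flow(p, t_true, omega_true, depth)

omega_est, t_est = estimate_egomotion(p, flow, depth)
print(omega_est, t_est)
```

Without depth, only the ratio of translation to depth appears in the flow, so the translation could be recovered only up to scale; known stereo depth removes that ambiguity.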

Journals (Peer-reviewed)

- Trung Thanh Ngo, Yuichiro Kojima, Hajime Nagahara, Ryusuke Sagawa, Yasuhiro Mukaigawa, Masahiko Yachida, Yasushi Yagi

Real-time Estimation of Fast Egomotion with Feature Classification using Compound Omnidirectional Vision Sensor

IEICE Transactions on Information and Systems, Vol.E93-D, No.1, pp.152-166, 2010.01


International Conferences (Peer-reviewed)

- Trung Ngo Thanh, Hajime Nagahara, Ryusuke Sagawa, Yasuhiro Mukaigawa, Masahiko Yachida, Yasushi Yagi

Robust and Real-Time Egomotion Estimation Using a Compound Omnidirectional Sensor

Proc. IEEE Int. Conf. Robotics and Automation, pp.492-497, 2008.03

- Trung Thanh Ngo, Hajime Nagahara, Ryusuke Sagawa, Yasuhiro Mukaigawa, Masahiko Yachida, Yasushi Yagi

Robust and Real-time Rotation Estimation of Compound Omnidirectional Sensor

Proc. IEEE Int. Conf. Robotics and Automation, pp.4226-4231, 2007.04


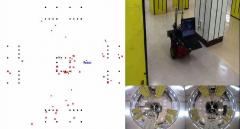

Simultaneous Localization and Mapping

Like humans and animals, an autonomous robot in an unknown environment must estimate the structure of its surroundings and its own location from visual information. To address this challenge, simultaneous localization and mapping (SLAM), which allows a robot to build a map of the environment and localize itself on that map at the same time, has been actively studied. There has been much research on visual SLAM, which uses a camera or a similar visual sensor, and this research falls mainly into two categories: methods using binocular (stereo) cameras and methods using monocular cameras. Each has advantages and disadvantages; for example, the binocular method must solve the stereo matching problem, while the monocular method cannot recover the absolute scale. Hence, neither the binocular nor the monocular technique is perfect.
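The scale ambiguity of the monocular approach can be checked numerically. In this minimal sketch (assuming NumPy, an ideal pinhole camera with unit focal length, and noise-free points), scaling the whole scene and the camera translation by the same factor `s` leaves every image projection unchanged, so images from a single camera cannot determine `s`; `project` is a hypothetical helper.

```python
import numpy as np


def project(points, R, t):
    """Project world points with a unit-focal-length pinhole camera whose
    pose maps x_world to x_cam = R @ x_world + t."""
    cam = points @ R.T + t
    return cam[:, :2] / cam[:, 2:3]


rng = np.random.default_rng(0)
# Scene points in front of the first camera (z between 4 and 10 units).
points = rng.uniform([-2.0, -2.0, 4.0], [2.0, 2.0, 10.0], size=(50, 3))

R1, t1 = np.eye(3), np.zeros(3)                 # first view at the origin
R2, t2 = np.eye(3), np.array([-0.5, 0.0, 0.0])  # second view after sideways motion

s = 3.0  # arbitrary global scale factor
view1_a, view1_b = project(points, R1, t1), project(s * points, R1, s * t1)
view2_a, view2_b = project(points, R2, t2), project(s * points, R2, s * t2)

# Both views are identical at either scale, so s cannot be observed.
print(np.abs(view1_a - view1_b).max(), np.abs(view2_a - view2_b).max())
```

A calibrated stereo rig avoids this because its baseline is a known metric length that does not scale with the scene.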

We proposed a hybrid SLAM method that combines the advantages of the binocular and monocular approaches. Our method provides accurate localization and dense environmental maps by switching between binocular and monocular estimators for each landmark in the environment, as the estimation conditions require.

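The switching criterion is not specified here, so the following is only a hypothetical sketch of one plausible per-landmark rule: use the binocular (stereo) estimate when its depth uncertainty is small, and fall back to the monocular estimator otherwise. It assumes a rectified stereo pair, first-order error propagation for Z = f·b/d, and made-up thresholds (`disparity_sigma_px`, `max_rel_depth_err`); none of these names or values come from the paper.

```python
from dataclasses import dataclass


@dataclass
class Landmark:
    landmark_id: int
    disparity_px: float  # stereo disparity; 0 if no reliable stereo match


def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth from disparity for a rectified stereo pair: Z = f * b / d."""
    return focal_px * baseline_m / disparity_px


def choose_estimator(lm, disparity_sigma_px=0.5, max_rel_depth_err=0.05):
    """Hypothetical rule: binocular when stereo depth is reliable, else monocular.

    First-order propagation of Z = f b / d gives sigma_Z / Z = sigma_d / d,
    so the relative depth error depends only on the disparity and its noise.
    """
    if lm.disparity_px <= 0:
        return "monocular"  # no usable stereo match for this landmark
    rel_err = disparity_sigma_px / lm.disparity_px
    return "binocular" if rel_err <= max_rel_depth_err else "monocular"


landmarks = [Landmark(0, 40.0), Landmark(1, 4.0), Landmark(2, 0.0)]
choices = [choose_estimator(lm) for lm in landmarks]
print(choices)  # ['binocular', 'monocular', 'monocular']
print(stereo_depth(40.0, focal_px=700.0, baseline_m=0.12))  # depth of landmark 0 in metres
```

Because nearby landmarks have large disparities, this rule tends to assign them to the binocular estimator and distant ones to the monocular estimator.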

International Conferences (Peer-reviewed)

- Trung Ngo Thanh, Yusuke Sakaguchi, Hajime Nagahara, Masahiko Yachida

Stereo SLAM Using Two Estimators

Proc. IEEE Int. Conf. Robotics and Biomimetics, No.M1-1, pp.19-24, 2006.11
